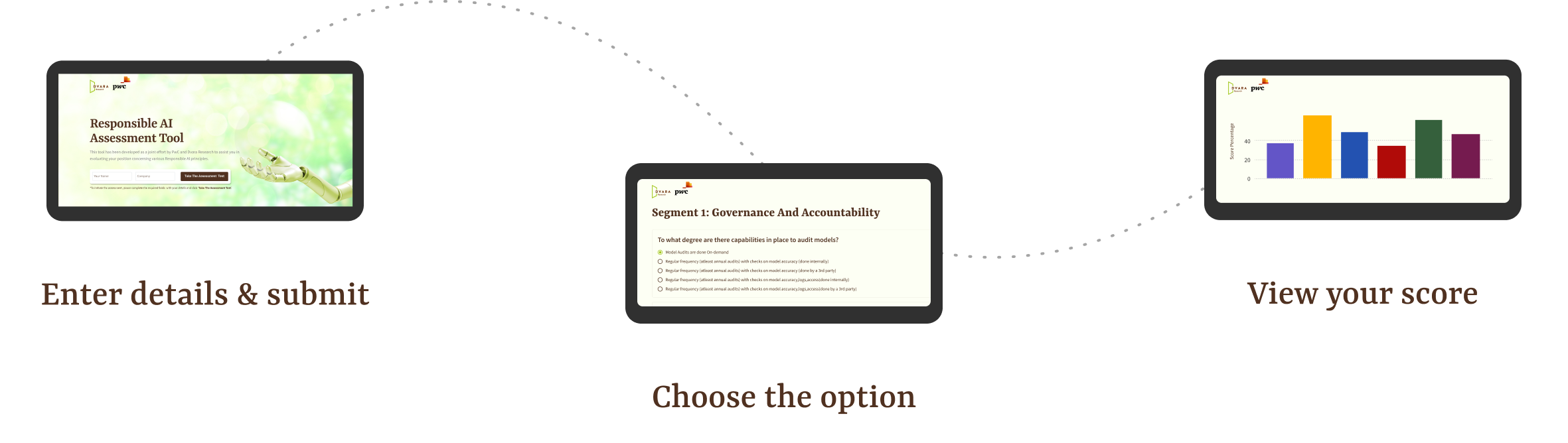

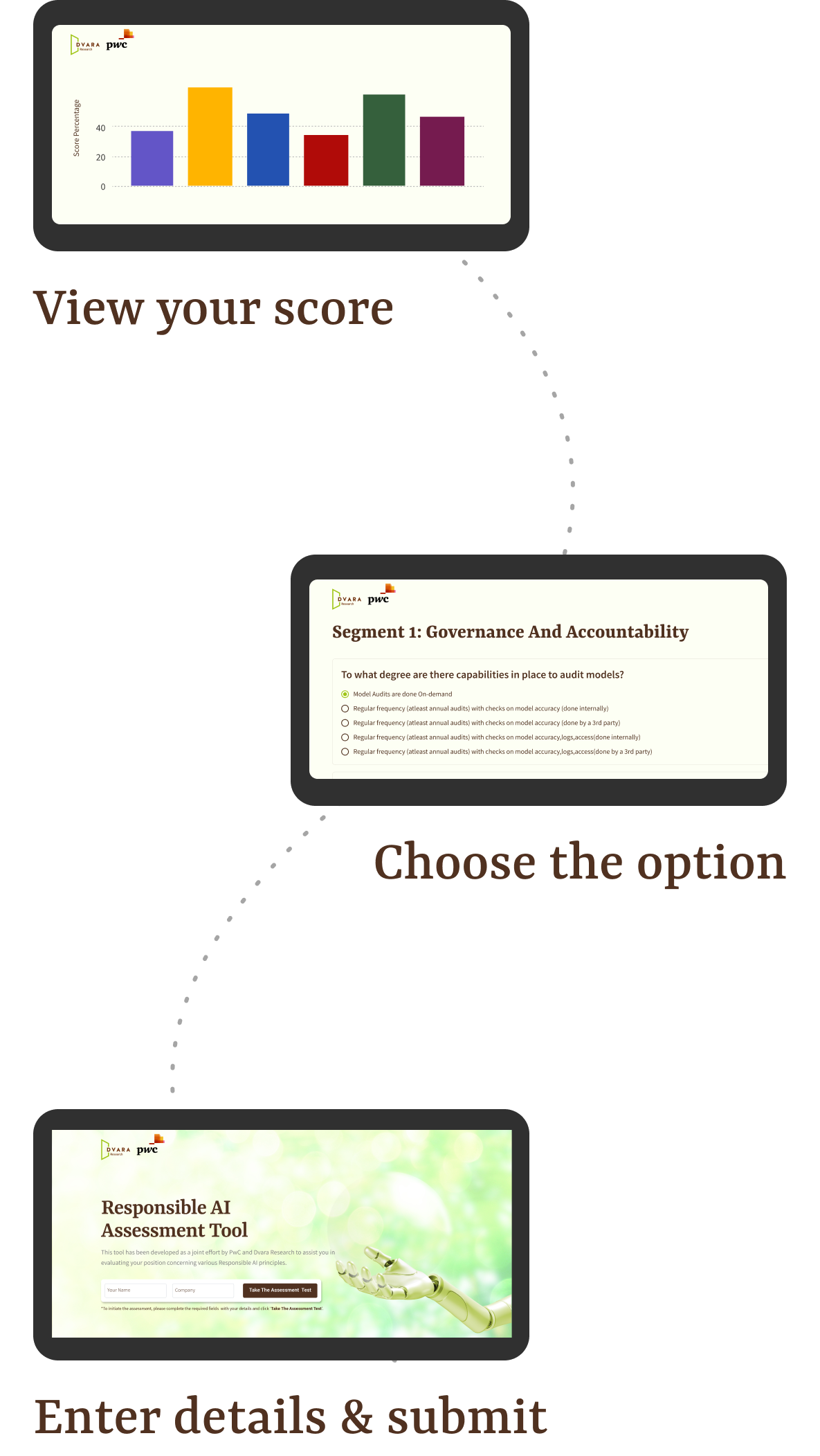

Responsible AI Assessment Tool

This tool has been developed as a joint effort by PwC and Dvara Research to assist you in evaluating your position concerning various Responsible AI principles.

To begin the assessment, complete the required fields with your details and click Take The Assessment.

For a better experience, we recommend reading about the Responsible AI principles before taking the assessment. The principles are described below.

Governance and Accountability

Governance: Governance emphasizes the need for effective frameworks for AI systems' development, deployment, and use, with regulation by external bodies and internal governance within organisations. It includes risk management, regular assessments, and stakeholder involvement, and mandates reporting to allocated boards for responsible AI adoption.

Accountability: Accountability ensures that those responsible are able to demonstrate their adherence to the attributes of Responsible AI. This allows for responsible actors to be held answerable for their roles in the functioning of the AI system.

Fairness and Non-discrimination

The attribute of Fairness and Non-discrimination mandates that AI systems be designed and implemented to prevent biases and discriminatory outcomes by fostering inclusivity, transparency, and regular monitoring throughout the AI lifecycle, while also complying with legal and ethical standards. Discrimination here would entail, for example, offering dissimilar credit terms to individuals who are alike in their creditworthiness. Some regulators, such as the Monetary Authority of Singapore (MAS), have set out practical guidance for implementing fairness. This guidance emphasises that no two groups or individuals should be treated differently without justification, and that any justification provided should be reviewed frequently for accuracy.
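The credit-terms example above can be illustrated with a small check. This is only a minimal sketch under stated assumptions: the `Applicant` record, the use of a credit score as the measure of creditworthiness, and both tolerance values are hypothetical and are not part of the assessment tool or of any regulator's guidance.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Applicant:
    applicant_id: str
    credit_score: int      # hypothetical measure of creditworthiness
    interest_rate: float   # offered annual rate, in percent


def find_disparate_pairs(applicants, score_tolerance=10, rate_tolerance=0.5):
    """Return ID pairs with similar scores but materially different rates.

    Both tolerances are illustrative assumptions, not recommended thresholds.
    """
    flagged = []
    for a, b in combinations(applicants, 2):
        similar = abs(a.credit_score - b.credit_score) <= score_tolerance
        different_terms = abs(a.interest_rate - b.interest_rate) > rate_tolerance
        if similar and different_terms:
            flagged.append((a.applicant_id, b.applicant_id))
    return flagged


applicants = [
    Applicant("A", 720, 9.0),
    Applicant("B", 725, 11.5),
    Applicant("C", 600, 14.0),
]
print(find_disparate_pairs(applicants))
```

A flagged pair is not proof of discrimination. It marks a difference in treatment that would need a documented justification, which is the kind of review the guidance describes.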

Privacy and Data Protection

The attribute of Privacy and Data Protection seeks to safeguard individuals' privacy rights by requiring the collection, processing, and storage of data to be conducted with transparency and consent, adhering to relevant laws and ethical standards. It also emphasizes the importance of minimizing data usage and ensuring that data processing does not reveal sensitive information that is irrelevant to the context and that the data principal would not ordinarily have shared with the business. Further, it emphasizes ensuring data security and providing individuals with control over their personal information, including rights to access, correct, and delete their data.

Transparency, Explainability and Contestability

Transparency: Transparency provides stakeholders with a broad view of the working of the AI system, tailored to their context. It includes the ability to trace the origins of a decision and of the underlying data, clearly identify AI-made decisions, and understand the limitations of the AI system. At the same time, the attribute allows the developers and deployers of AI to safeguard their Intellectual Property and Trade Secrets. For the attribute to be effective, it must provide information that is appropriate for the actor seeking it out. While transparency does not assure accuracy, it makes accuracy less costly to verify by enabling an enquiry into the logic of the system and the origin of the underlying data.

Explainability and Contestability: Explainability means enabling the people to whom the outcome of an AI system relates to understand how it was arrived at. This entails providing easy-to-understand information, which empowers those adversely affected by the outcome of the AI to challenge it. We imagine the attribute to have reasonable restrictions built in, in that it only obliges the deployers of AI to make the output intelligible by sharing the underlying factors and logic (exogenous explainability) and not to lay bare the intricacies of the model itself (decompositional explainability). Contestability ensures timely human review and remedy if an automated system fails or produces errors, especially in sensitive contexts.

Protecting Human Agency and Instituting Human Oversight

This attribute seeks to privilege human autonomy by ensuring that the AI system remains accountable to a human. Further, it emphasizes that individuals be able to make informed and autonomous decisions regarding AI systems. This is achieved through the introduction of the element of human oversight in the functioning of the AI system.

Technological Dependability of the AI system (and its ability to respond to realized risks)

This attribute is a composite of three characteristics:

(i) Reliability: Reliability of the output is the ability of an AI system to perform as required, without failure, for a given time interval, under given conditions. In essence, reliability ensures that an AI system behaves exactly as its designers intended and anticipated, and repeatedly exhibits the same behaviour under the same conditions. Reliability is a goal for the overall correctness of the AI system.

(ii) Robustness and Resilience: Robustness, in the context of AI systems, refers to the ability of an algorithm or a model to maintain its accuracy and stability under different conditions, including variations in input data, environmental changes, and attempts at adversarial interference. Robustness ensures that the system can withstand unforeseen challenges and continue to function effectively. Resilience of AI refers to its ability to bounce back after disruptions.

(iii) Safety and Security: The attribute of safety refers to reducing ‘unintended’ behaviour in the functioning of AI. It aims to prevent unwanted harms to human life, health, property or the environment.
