In this write-up, we summarise our comments on the Report on AI Governance Guidelines Development [Report], submitted in response to the public consultation by the Ministry of Electronics and Information Technology. Our full response is accessible here.

The response presents our thinking on the governance of artificial intelligence (AI) and is divided into two parts. Part A summarises our key inputs, which are also presented below in this write-up. Part B provides detailed, paragraph-wise feedback on each section of the Report, as per the Ministry’s Consultation Form requirements. Our inputs cover the following four themes:

1. Feedback on AI Governance Principles

The principles proposed for the governance of AI are rich and cover a range of aspects. To this list we recommend adding the principle of Contestability and Explainability, as an extension of the principle of Transparency. Explainability means enabling the people to whom the outcome of an AI system relates to understand how that outcome was arrived at. This entails providing easy-to-understand information that empowers those directly affected by the outcome to challenge it. We envisage the principle with reasonable restrictions built in:[i] it obliges deployers of AI only to make the output intelligible by sharing the underlying factors and logic (exogenous explainability), and not to lay bare the intricacies of the model itself (decompositional explainability).[ii] Contestability empowers users to challenge the decisions of an AI system. It gives users a say over the system and an opportunity to dispute its decisions rather than accept them by default. It also creates room for human review of the system and for remedying erroneous decisions, especially in sensitive contexts.

Transparency, explainability and contestability obligations may differ across contexts. Globally, the extent of these obligations is being determined by the sensitivity of the use case and the implications of a misjudgement by the AI (as in the EU AI Act, for instance). Excessive transparency could create confusion or expose AI models to exploitation or manipulation. Similarly, excessive explainability could incentivise developers to reduce the number of variables in a model, reducing its accuracy.[iii] It is therefore essential to set bounds on these attributes. One approach is to use graded levels of explainability: regulators such as the Monetary Authority of Singapore recommend that the sophistication of an explanation should match the expertise of the agent requesting it.

In partnership with PwC India, Dvara Research has compiled a list of six principles of Responsible AI. These can guide providers in their endeavour to ensure that their AI practices are Responsible and that the resulting outcomes are Trustworthy. The principles are expanded upon in the comments to Section II.A.

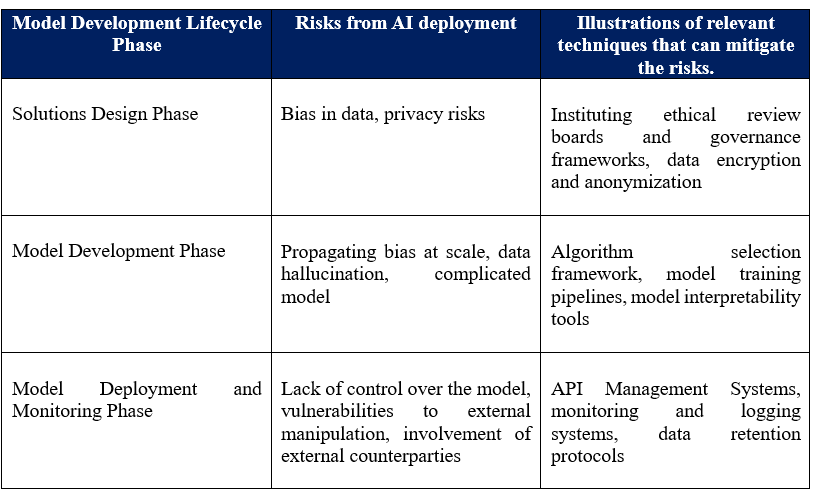

2. Feedback on Examining AI Systems Using a Lifecycle Approach

We support the Report’s lifecycle approach to governing AI. In our forthcoming report on implementing Responsible AI, we set out the benefits of a lifecycle approach to AI. These include enhanced visibility over the various components of an AI system and the stakeholders responsible for each. This visibility allows us to unpack the origins of risks and benefits along each link of the chain and to allocate proportionate responsibilities to every actor. Visibility over the different components, their potential implications and their respective stewards also offers comfort to the regulator. The approach further allows legal responsibility to be distributed across actors. In our comments to section II.B, we highlight the priorities for governance at each stage of model development. These are briefly summarised in the table below.

3. Taking an ecosystem view of AI actors

A natural consequence of the lifecycle approach is taking an ecosystem view of all actors involved in the AI value chain. The lifecycle approach and the ecosystem view are two sides of the same coin: neither can be conceived without the other. Our preliminary research suggests that the ecosystem view is also a necessary precondition for apportioning liability in the AI value chain. Clarity on liability and contractual certainty will go a long way in preserving and nurturing momentum in the sector. However, we must contend with three key concerns when thinking about liability in the case of AI:

- The participation of several actors makes it difficult for the aggrieved to seek redress. The interplay of data providers, data brokers, software providers, hardware providers and third parties with access rights makes it difficult for a layperson to identify the cause of an adverse algorithmic outcome and the counterparty responsible for it.

- It is unclear what qualifies as a defect or a fault. Though a fault-based liability regime is a popular contender for a liability framework in the case of AI, it has its limitations. Autonomous systems that can cause unintended harm challenge the mainstream understanding of defect and fault. For instance, when an autonomous system reaches a decision different from that of experts, it is unclear whether the system’s output can be qualified as faulty. In the same vein, it is unclear whether an expert can be held negligent for deferring to the AI when the AI is wrong, and vice versa.

- It is difficult to establish causality in the liability framework. Most AI outcomes are probabilistic rather than deterministic. Our extant laws, by contrast, are deterministic and designed to be binary: they define what is and is not allowed. In the case of AI, it is not possible to determine in advance how a system will respond to the prompts given to it. Since it is a feature of AI to make connections in ways the deployer may not have intended, holding deployers strictly responsible for every transgression may not work. Deployers of automated and autonomous systems cannot control their functioning the way manufacturers of physical, tangible products such as car airbags can. Therefore, it is difficult to establish liability in these cases.

4. The need for transparency and responsibility across the AI ecosystem in India

We welcome the idea of a baseline framework for AI, given the limitations of a risk-based approach to regulating AI. In our response to the Working Document: Enforcement Mechanisms for Responsible #AIforAll (Working Document), released by NITI Aayog in November 2020, we discuss how the most pronounced limitation of a risk-based approach is its heavy reliance on probabilistic modelling. Although such modelling may work on paper, it may not stand the test of practice. A risk-based approach may in fact give a false sense of security and encourage people to take on bigger risks than they otherwise would. Further, the approach focuses on high-risk cases, sometimes to the exclusion of low-risk ones. Yet low-rated risks can be unstable and may accumulate and escalate into higher risks.

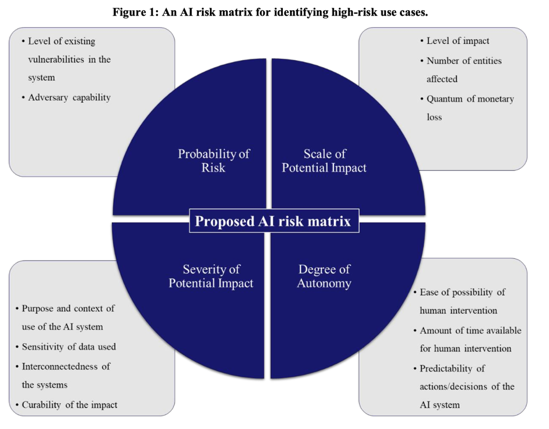

Yet the utility of risk-based frameworks, when applied with appropriate caution, cannot be downplayed. Such frameworks can serve as an effective complement to expert discretion, though they may be hazardous when relied upon exclusively. We propose a risk qualification framework that rests on four prongs:

- the probability that a harm can materialise from the malfunction of an AI system;

- the scale of the harm;

- the autonomy of the AI system (which captures the ability to control the emergence of the harm); and

- the severity of the harm.

These are expanded upon in the comments to section III.B.2 and are briefly summarised in the figure below.

[i] https://oecd.ai/en/dashboards/ai-principles/P7

[ii] https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3974221